The Need for Continuous Training in Computer Vision Models

A computer vision model that works under one lighting condition, store layout, or camera angle can quickly fail as conditions change. In the real world, nothing is constant: seasons change, lighting shifts, new objects appear, and cameras are repositioned.

In order to keep computer vision models accurate and reliable, organizations need to develop scalable training and retraining pipelines that can adapt to changing conditions.

A training pipeline is the sequence of steps that takes real-world data through to benchmark results and a performance evaluation. It consists of many components, including selecting the data, organizing the datasets, feeding the data into the model, testing the model’s performance, and logging the output. However, because these steps depend on one another, an error in one step can disrupt the entire pipeline, which makes it difficult to debug and maintain.

Why Retraining Matters in Computer Vision

Data in the real world is dynamic and messy. Unlike fixed datasets used in controlled environments, live data is constantly changing. For example, in retail, a camera might accurately identify empty shelves initially, but new product packaging or different lighting can disrupt the system’s accuracy. In manufacturing, a camera watching a production line might not be able to recognize parts if machinery or materials are updated.

This phenomenon, known as model drift, occurs when a model’s accuracy degrades due to shifts in the data distribution. Without retraining, businesses risk losing accuracy, operating less efficiently, and even suffering reputational damage. For example, a computer vision system that once achieved 90% accuracy can drop to 75% or lower within months if left unattended.

The solution is continuous retraining. By constantly updating models with up-to-date data, organizations can maintain peak performance, keep pace with evolving conditions, and obtain maximum return on AI investments.

However, retraining also comes with costs, both in compute and in data management. Frequent retraining, especially for vision systems deployed across hundreds of locations, can require significant cloud resources, storage, and engineering time. For instance, retraining a model on new shelf images or lighting conditions might mean processing terabytes of video data. To minimize costs, many organizations adopt hybrid approaches, using smaller incremental updates or retraining only when data drift exceeds certain thresholds.
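To make the idea of a drift threshold concrete, here is a minimal sketch. It assumes drift is tracked on a single scalar feature per frame (mean brightness is used as a stand-in) and scores it with the Population Stability Index (PSI), one common drift measure among several. The function names, the bin count, and the 0.2 cutoff are illustrative assumptions, not values from any particular platform.

```python
import math

def population_stability_index(reference, live, bins=10):
    """PSI between two samples of a scalar feature (e.g., mean frame brightness)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range are clamped into the end bins
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))

def should_retrain(reference, live, threshold=0.2):
    """Assumed policy: retrain only when the drift score exceeds the threshold."""
    return population_stability_index(reference, live) > threshold

# Hypothetical brightness values in [0, 1): training-time data vs. brighter live data
reference = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]
print(should_retrain(reference, reference))  # identical distributions: no drift
print(should_retrain(reference, live))       # shifted distribution: drift detected
```

A single brightness statistic is a crude proxy. In practice the same check could run on embedding distances or per-class confidence, but the gating logic stays the same.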

Building a Scalable Training and Retraining Pipeline

Every vision AI system requires a continuous training and retraining loop. This loop ensures models evolve with the world they are observing, maintaining accuracy when faced with changing conditions.

Key Components of a Scalable Pipeline:

1. Automated Data Ingestion and Filtering

Edge devices produce raw visual data continuously. A scalable system automatically ingests, filters, and stores this data, choosing only the most useful examples for retraining.
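One way to choose "the most useful examples" is uncertainty sampling: keep the frames the current model is least sure about. The sketch below assumes each ingested frame already carries the model's top-class confidence. The `Frame` type, its fields, and the default cutoffs are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    frame_id: str
    confidence: float  # model's top-class confidence for this frame (assumed field)

def select_for_retraining(frames, max_confidence=0.6, budget=100):
    """Keep the least-confident frames, up to a fixed labeling budget."""
    uncertain = [f for f in frames if f.confidence < max_confidence]
    uncertain.sort(key=lambda f: f.confidence)  # most uncertain first
    return uncertain[:budget]

frames = [
    Frame("a", 0.95),
    Frame("b", 0.40),
    Frame("c", 0.55),
    Frame("d", 0.70),
]
picked = select_for_retraining(frames, budget=2)
print([f.frame_id for f in picked])  # ['b', 'c']
```

The budget keeps storage and labeling costs bounded, however much video the edge devices produce.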

2. Labeling and Curation

Successful retraining depends on high-quality labels. Automated labeling, validation, and active learning can help ensure that data is annotated accurately, with few of the errors that would degrade model performance.
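As one illustration of label validation, the sketch below accepts a label only when enough annotators (human or automated) agree on it, and otherwise flags the image for review. The function name and the two-thirds agreement threshold are assumptions chosen for the example.

```python
from collections import Counter

def consolidate_labels(votes, min_agreement=2 / 3):
    """Return (label, agreement); label is None when agreement is too low."""
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement >= min_agreement else None), agreement

print(consolidate_labels(["empty", "empty", "stocked"]))  # accepted as 'empty'
print(consolidate_labels(["empty", "stocked"]))           # no consensus: needs review
```

Images that come back with `None` are natural candidates for a second labeling pass, which is where active learning and human review tend to pay off most.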

3. Model Retraining

Retrain models on curated datasets either on a regular schedule (e.g., every month) or in response to an event, such as performance dropping below a defined threshold. Incremental learning can also be used to adapt models gradually without retraining from scratch.
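The two triggers can be combined in a single check. This is a minimal sketch. The function name, the 30-day interval, and the 0.90 accuracy floor are assumed values for illustration.

```python
from datetime import datetime, timedelta

def retraining_due(last_trained, now, current_accuracy,
                   max_age=timedelta(days=30), min_accuracy=0.90):
    """Return the reason retraining is due, or None if it is not."""
    if current_accuracy < min_accuracy:
        return "accuracy_drop"   # event-driven trigger
    if now - last_trained >= max_age:
        return "schedule"        # interval-based trigger
    return None

now = datetime(2024, 3, 1)
print(retraining_due(datetime(2024, 2, 20), now, 0.95))  # None
print(retraining_due(datetime(2024, 1, 1), now, 0.95))   # schedule
print(retraining_due(datetime(2024, 2, 20), now, 0.80))  # accuracy_drop
```

Returning the reason, instead of a bare boolean, makes it easier to log why each retraining run was started.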

During retraining, the new data is integrated into the existing model’s learning process. Depending on the chosen approach, this might mean fine-tuning specific model layers (to preserve prior knowledge) or fully retraining from scratch using a combination of old and new data. The updated model then re-learns visual features from the latest examples, such as a redesigned package, a new lighting condition, or a shifted camera angle. This process ensures that the model not only recovers lost performance but also becomes more robust to future variations.
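Combining old and new data can be as simple as sampling a share of historical examples alongside every new one, so the model does not forget earlier conditions. The sketch below shows that mixing step only, not the training itself. The function name and the `old_fraction` parameter are hypothetical.

```python
import random

def build_training_set(old_samples, new_samples, old_fraction=0.5, seed=0):
    """Mix all new samples with a random share of old ones.

    old_fraction is the target share of old samples in the result (0 <= f < 1).
    """
    if not 0 <= old_fraction < 1:
        raise ValueError("old_fraction must be in [0, 1)")
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    wanted = round(len(new_samples) * old_fraction / (1 - old_fraction))
    n_old = min(len(old_samples), wanted)
    mixed = list(new_samples) + rng.sample(list(old_samples), n_old)
    rng.shuffle(mixed)
    return mixed

old = [f"old_{i}" for i in range(100)]
new = [f"new_{i}" for i in range(10)]
mixed = build_training_set(old, new)
print(len(mixed))  # 20: all 10 new samples plus 10 sampled old ones
```

The same mixed set works for either approach described above: it can feed a fine-tuning run on selected layers or a full retrain from scratch.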

4. Validation and Deployment

Retraining is followed by strict validation before deployment. Scalable pipelines automatically test the model against both historical and new data to confirm that it meets accuracy thresholds before it is deployed to edge devices or cloud endpoints.
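A validation gate of this kind might look like the sketch below. It assumes accuracy has already been measured per evaluation set, and it blocks deployment if the candidate falls below an absolute floor or regresses against the current model. The function name and both thresholds are illustrative.

```python
def passes_validation(candidate, baseline, min_accuracy=0.90, max_regression=0.01):
    """candidate/baseline: dicts mapping evaluation-set name -> accuracy."""
    for name, acc in candidate.items():
        if acc < min_accuracy:
            return False  # below the absolute accuracy floor
        if name in baseline and acc < baseline[name] - max_regression:
            return False  # noticeably worse than the currently deployed model
    return True

baseline = {"historical": 0.95, "new": 0.78}
print(passes_validation({"historical": 0.945, "new": 0.95}, baseline))  # True
print(passes_validation({"historical": 0.91, "new": 0.96}, baseline))   # False
```

Checking the historical set separately guards against a model that learns the new packaging but forgets the old.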

5. Monitoring and Feedback

Real-time performance metrics are recorded through ongoing monitoring, highlighting when retraining is needed. Feedback loops allow the system to enhance itself over time, with decreasing manual intervention.
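A simple form of this monitoring is a rolling accuracy window over recent predictions. The sketch assumes that ground truth arrives for at least a sample of predictions (for example, through spot checks). The class name, window size, and alert level are hypothetical.

```python
from collections import deque

class RollingAccuracy:
    """Tracks accuracy over the most recent `window` verified predictions."""

    def __init__(self, window=500, alert_below=0.90):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.alert_below = alert_below

    def record(self, correct):
        self.outcomes.append(bool(correct))

    @property
    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only alert once the window is full, to avoid noisy early readings
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy < self.alert_below

monitor = RollingAccuracy(window=4, alert_below=0.75)
for outcome in [True, True, True, False, False]:
    monitor.record(outcome)
print(monitor.accuracy, monitor.needs_retraining())  # 0.5 True
```

The alert from a monitor like this is what closes the feedback loop: it can feed the retraining trigger directly, without anyone watching a dashboard.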

[Animated demo: Shelf Count]

This example uses logic-based processing (a color detector filter) rather than a full custom-trained ML model at this stage of the pipeline.

Real-World Example: Retail Computer Vision

Consider a retail company that uses computer vision to monitor product inventory. Initially, the system works well, detecting bare shelves with 95% accuracy. After new packaging designs and displays are introduced, it begins to misidentify products.

By utilizing an extensible retraining pipeline, the company can:

- Collect new video samples automatically from stores

- Filter and annotate relevant images showing packaging or display updates

- Retrain models efficiently with cloud or hybrid compute infrastructure

- Deploy new models to all stores with minimal downtime

Within weeks, the system's accuracy is restored to 96%. The pipeline then continuously tracks model performance and automatically retrains models when performance drops, ensuring consistent accuracy and reliability.

Beyond restoring performance, the company can also scale its computer vision application to hundreds or thousands of locations.

Best Practices for Scalable Computer Vision Retraining

Automate Where Possible: Manual retraining is tedious and prone to human error. Automate data ingestion, labeling, and training triggers to maintain performance at scale.

Prioritize High-Impact Data: Not all data is created equal. Prioritize edge cases and situations where models perform worst.

Monitor Continuously: Monitor model accuracy, error rates, and other drift metrics to know when retraining is needed.

Leverage Incremental Learning: Update models incrementally without full retraining cycles to save resources.

Ensure Reproducibility: Use version control for datasets and models to provide reproducible outcomes and facilitate audits.
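For reproducibility, one lightweight option is to fingerprint each training dataset so that every model version can be traced back to the exact data it saw. The sketch below hashes a manifest of image paths and labels. The manifest format and function name are assumptions, and dedicated data-versioning tools offer far more than this.

```python
import hashlib
import json

def dataset_fingerprint(manifest):
    """manifest: dict mapping image path -> label. Returns a short stable ID."""
    # Sorting keys makes the hash independent of insertion order
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

v1 = {"store1/img_001.jpg": "empty", "store1/img_002.jpg": "stocked"}
v2 = {"store1/img_002.jpg": "stocked", "store1/img_001.jpg": "empty"}
v3 = {"store1/img_001.jpg": "stocked", "store1/img_002.jpg": "stocked"}

print(dataset_fingerprint(v1) == dataset_fingerprint(v2))  # True: same data, different order
print(dataset_fingerprint(v1) == dataset_fingerprint(v3))  # False: one label changed
```

Storing this fingerprint next to each trained model makes it possible to answer, during an audit, which data produced which model.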

Build Vision AI Better with Plainsight

In computer vision, deploying a model is only the beginning. Real-world conditions constantly change, and models that fail to adapt can quickly lose accuracy. Scalable model training and retraining pipelines are essential to keep AI systems reliable and adaptive.

With scalable infrastructure, automated workflows, and continuous monitoring, organizations can transform computer vision from a static tool into a living, evolving system.

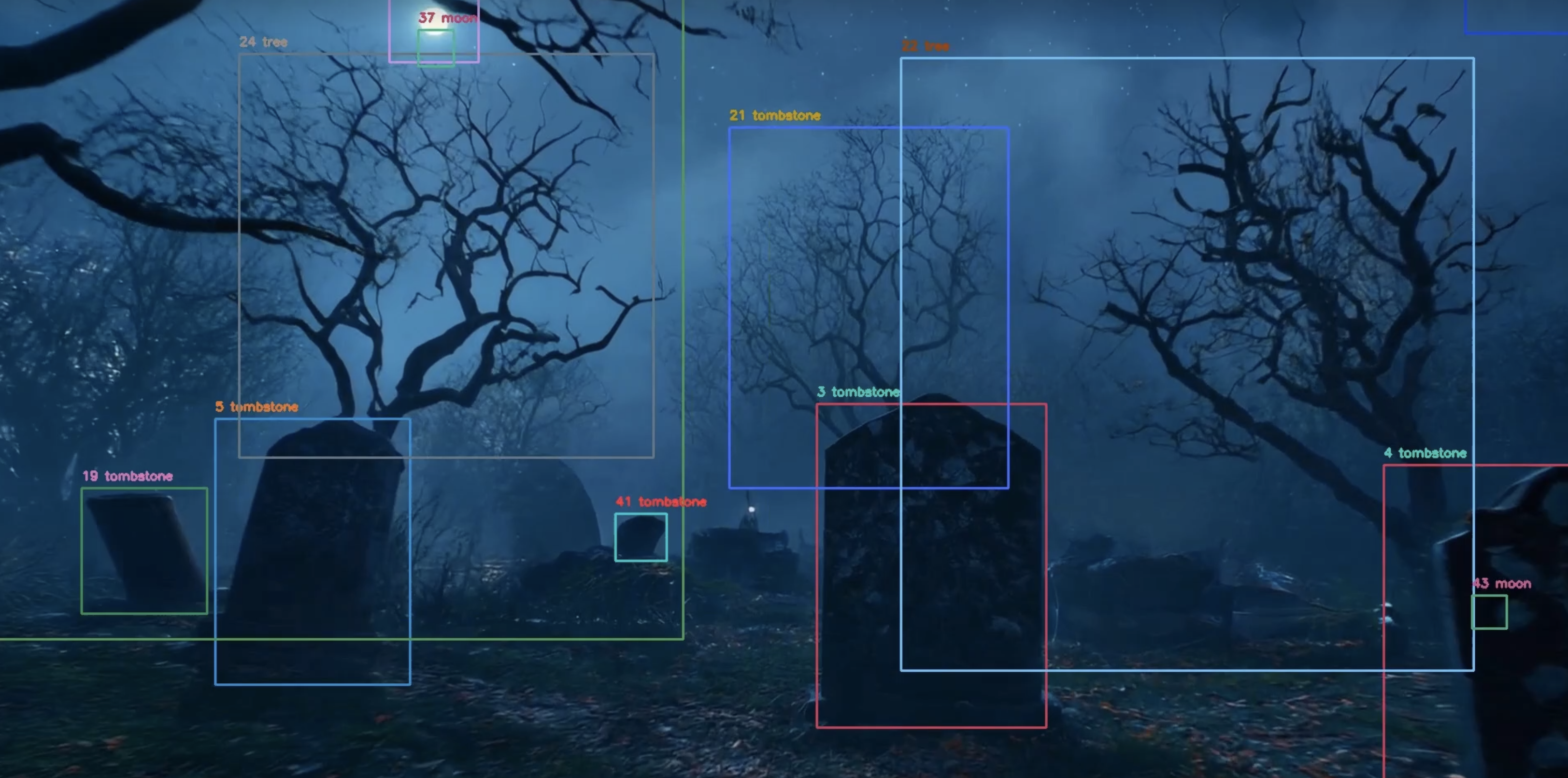

The Plainsight Platform offers custom models and filters for training at scale, with retraining capabilities that update models based on incoming data. It intelligently balances cost and performance, automating retraining only when data drift is detected or accuracy thresholds are breached, ensuring efficient use of resources. By automating retraining and integrating continuous feedback loops, Plainsight helps ensure your computer vision model scales with you.

Explore the Plainsight Platform and see how your vision AI can evolve in real time.
