Every day we hear what businesses want out of Computer Vision in order to deliver real value: more accurate data, scalability, data privacy, and maintainable systems. These are non-negotiable requirements, so why is it such a challenge to get all of them in one solution? Fundamentally, the difficulty comes from building and maintaining the models that power Computer Vision applications.

Computer Vision involves acquiring, processing, analyzing, and understanding digital images to convert visual information into actionable data. In simpler terms, it’s about transforming what a computer “sees” into something it can “understand” and act upon.

The critical components of Computer Vision are the models that perform tasks such as scene reconstruction, object detection, event detection, and more. Built using geometry, physics, statistics, and learning theory, these models require rigorous training to perform specific tasks, such as recognizing objects in images or tracking movements in a video.

Unfortunately, the central challenge with computer vision models is that they lose performance over time. This degradation can stem from changes in the environment, variations in the input data, or the natural evolution of the tasks the model was originally trained for. As a result, models need ongoing maintenance and retraining to remain effective and relevant.

The Options in the Computer Vision Market

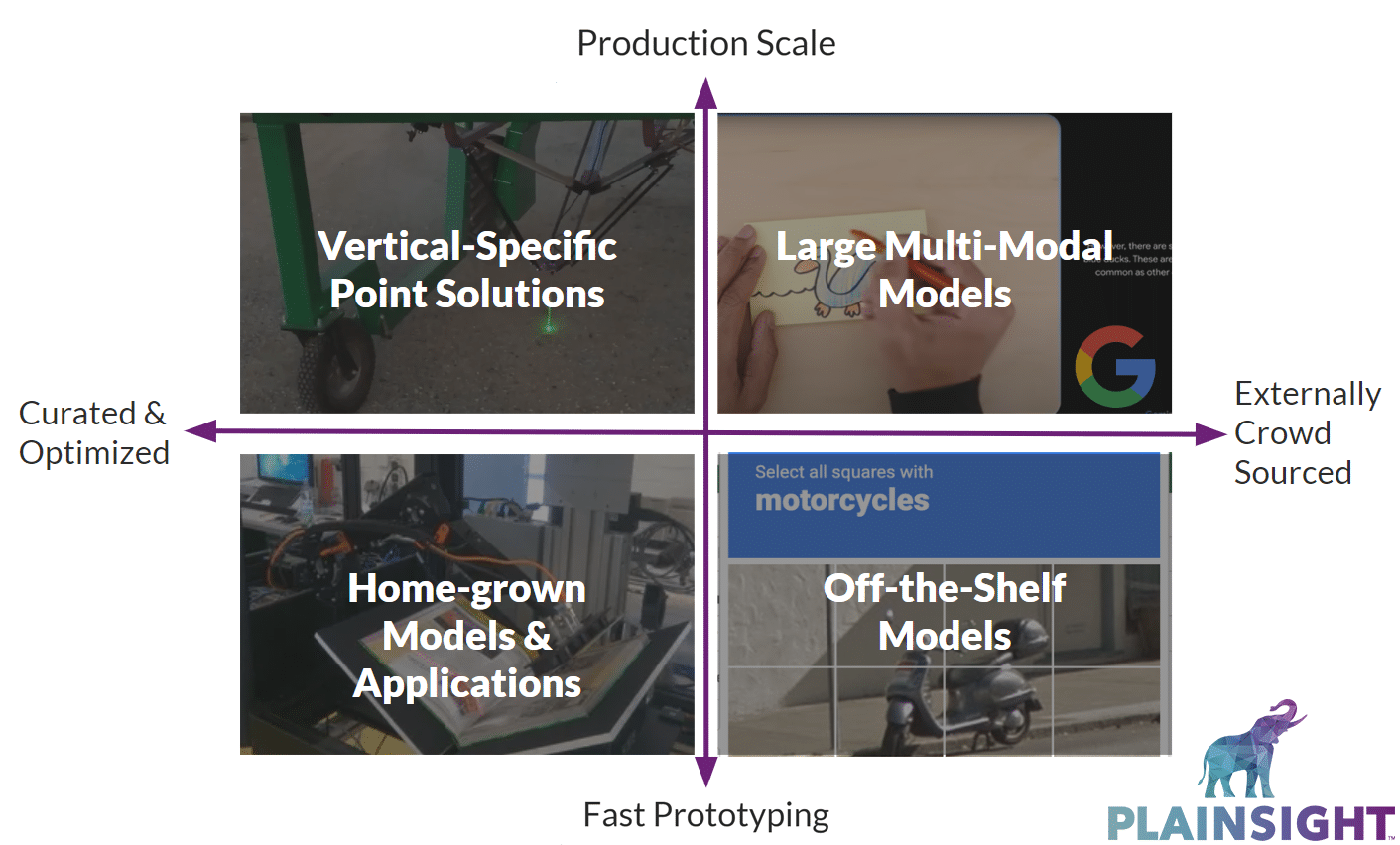

Given the complexity of building and maintaining computer vision models, you’d think there would be lots of great options to choose from depending on your needs. Look closer, though, and you’ll quickly see the unacceptable tradeoffs each one forces you to make.

Option 1: Vertical-Specific Point Solutions

Vertical-specific point solutions are complete out-of-the-box systems (usually using dedicated hardware) designed to solve a particular problem within a specific industry or domain. Their primary advantage is effectiveness in addressing a single, well-defined problem, which makes them easy to implement without significant customization. However, they lack flexibility, making them less suitable for businesses with evolving needs. That can lead to buying several point solutions and to hardware costs that do not scale.

If your business faces multiple challenges, or if you want to explore new areas of application, these solutions will become limiting. They are not designed to be easily adapted or expanded beyond their original scope. This means that while they can solve the problem at hand, they may not be suitable for businesses with evolving or diverse needs (and how many businesses don’t have those?). In such cases, companies will find themselves needing multiple point solutions, which complicates integration and increases costs.

Option 2: Large Multi-Modal Models

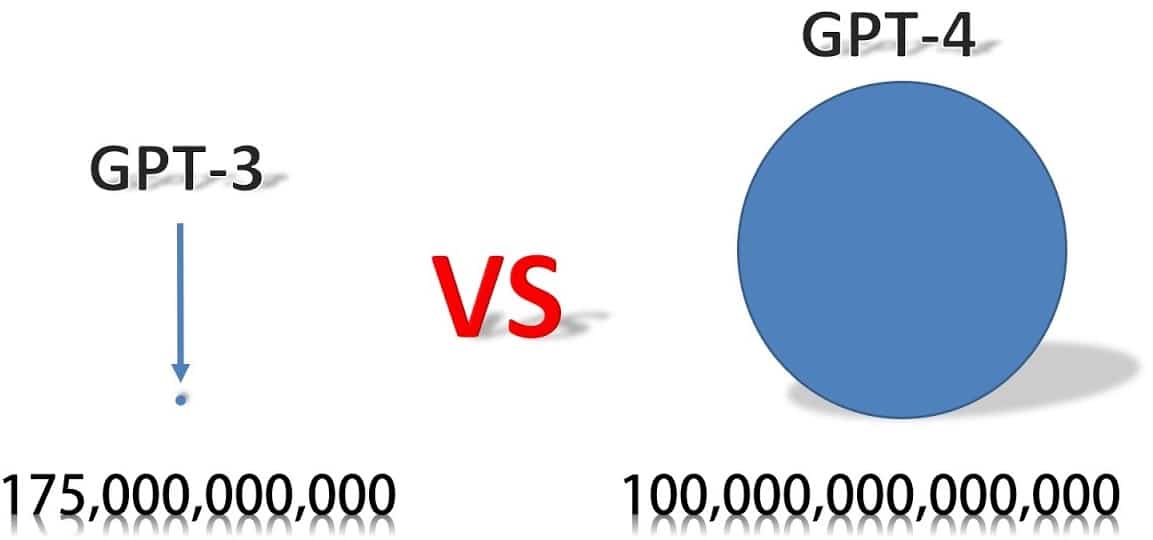

Large multi-modal models, developed by tech giants like OpenAI and Google, represent some of the most advanced AI technologies available today. These models are trained on vast datasets across multiple modalities, such as text, images, and video, allowing them to perform a wide range of tasks.

The primary benefit of these models is their versatility and power. They can handle a broad spectrum of tasks, making them appealing to businesses that need a solution capable of performing various functions. Additionally, these models often come with the backing of extensive research and continuous updates, ensuring they remain at the cutting edge of AI technology.

However, data privacy concerns surface because these models require sharing proprietary data with external providers, which may be a deal-breaker for companies that prioritize data confidentiality. Their cost also scales with the volume of data sent to and processed by the model. And because a multi-modal model is so big, its computational requirements are enormous, which limits the speed at which it can process queries.

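OpenAI has not published GPT-4’s parameter count (the widely circulated figure of 100 trillion is an unverified rumor), but its predecessor GPT-3 already had 175 billion parameters to support its many capabilities. Why would a business application need that many to solve a single problem?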

Option 3: Off-the-Shelf Models

Off-the-shelf models, which include pre-built, open-source, and crowd-sourced options, offer a quick and accessible way to implement computer vision solutions for common tasks such as object detection, facial recognition, or image classification. Many off-the-shelf models are developed by large communities of AI researchers and practitioners, ensuring a high level of reliability and robustness for general-purpose applications.

One of the main advantages of off-the-shelf models is their ease of use and quick deployment. They are ideal for a business unit that needs to get up and running quickly, without the need for extensive customization or development time.

The trade-off for this convenience is that they are not trained on images specific to your use case. While they may work well for general tasks, their accuracy and effectiveness suffer when applied to specialized or unique use cases. Additionally, as you scale your operations or require more complex functionality, these models will not perform as well. They will then need significant customization or even a complete overhaul, which limits their long-term viability.

Option 4: Home-grown Models & Applications

When faced with the limitations of off-the-shelf solutions, many businesses consider building their own computer vision models and applications from scratch. This approach can be highly appealing: a custom-built model is trained on your own data for the exact tasks and conditions you encounter, so it can achieve higher accuracy, better performance, and more relevant insights. It can also run entirely on-premises, so you keep full ownership of your data and model and never need to share sensitive information with third-party providers, which keeps your data private and secure. It also seems like a tractable project, since so many tools are available to make it easier. At least it seems that way.

Even with these tools, developing a robust computer vision system requires expertise in machine learning, computer vision, and data engineering, along with substantial infrastructure: computing resources, specialized software, and a robust data pipeline. If your organization has a team with these skills, or you can partner with a trusted expert, this approach can be viable. But it’s essential to recognize that this level of development is resource-intensive, time-consuming, and costly. And it remains so in perpetuity.

The most significant challenge of home-grown models is the long-term commitment to maintenance. Unlike off-the-shelf solutions, which are typically supported and updated by the vendor, custom-built models require continuous upkeep. This means that as your business grows and changes, your models must be retrained and applications updated to remain effective.

Over time, the environment in which your models operate will change, leading to a gradual decline in performance. For instance, new types of data, changes in the operating environment (even a foreign object, like a hand, occluding a critical area), or shifts in the tasks your models are designed to perform will all impact their effectiveness. Addressing these issues requires ongoing monitoring, retraining, optimization against benchmarks, and adaptation to new technologies. These tasks can be complex and resource-heavy, and they demand specialized knowledge.
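To make the monitoring part concrete, here is a minimal sketch of one possible approach: spot-check a sample of predictions against human labels, keep a rolling accuracy, and flag the model once it falls below the accuracy measured at deployment. The class name `DriftMonitor`, its parameters, and the thresholds are illustrative assumptions, not part of any particular product or library.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical helper: tracks rolling accuracy on spot-checked predictions."""

    def __init__(self, baseline_accuracy, window=200, tolerance=0.05):
        # baseline_accuracy: accuracy measured when the model was deployed (assumed known)
        # window: number of most recent spot checks to keep
        # tolerance: how far below the baseline accuracy may fall before we flag it
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.results = deque(maxlen=window)

    def record(self, predicted_label, true_label):
        """Store whether one spot-checked prediction matched the human label."""
        self.results.append(predicted_label == true_label)

    def rolling_accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self):
        """True once a full window shows accuracy below baseline minus tolerance."""
        if len(self.results) < self.results.maxlen:
            return False  # not enough evidence yet
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance
```

In practice, each `record` call would be fed by a periodic human review of a small sample of frames. A check like this only signals that something has changed; deciding what to relabel and retrain is still the resource-heavy part described above.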

Computer Vision in an Ideal World

If you’re ready to make your cameras count, let’s talk.