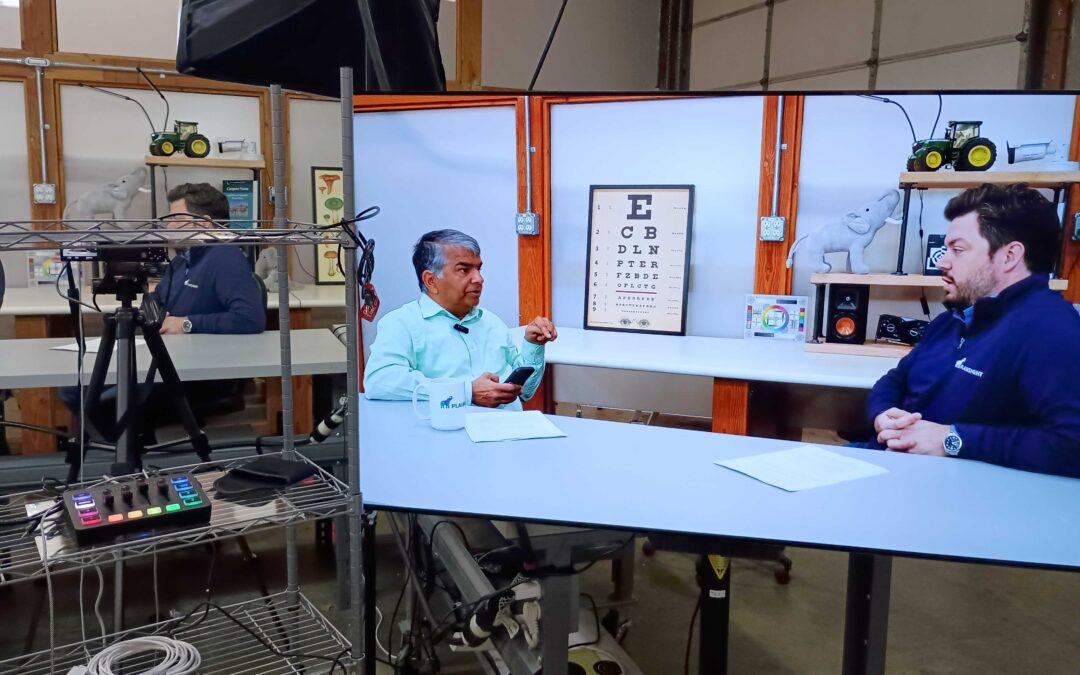

Generative AI has taken the world by storm in recent years, transforming industries and reshaping how businesses operate. That momentum was on full display at re:Invent 2024, where the spotlight was on new solutions, emerging trends, and the possibilities AI brings to enterprises. Larry Carvalho, Principal Consultant at RobustCloud, and Kit Merker, CEO at Plainsight, recently sat down to discuss Larry’s takeaways from re:Invent, generative AI advancements, the growing importance of model choices, and the critical role of deploying AI on edge devices.

Whether you’re an AI enthusiast or a professional eager to understand how these developments could elevate your organization, this article dives deep into the potential applications of enterprise AI, as explored in their conversation.

Generative AI at re:Invent 2024 – The Key Takeaways

Generative AI took center stage at re:Invent 2024. Amazon impressed attendees with new announcements and a growing ecosystem backing its AI initiatives. According to Larry Carvalho, one of the major announcements revolved around Amazon Bedrock, a cloud-based service that allows developers to build generative AI applications. Companies like Anthropic and Meta have committed to using Amazon’s infrastructure for training and inference on their AI workloads, offering flexibility and scalability to enterprise users.

This represents a significant win for organizations looking for seamless integration between AI models and cloud services. Amazon’s infrastructure also serves as a strategic advantage for customers who already store their data on AWS, making it easier to centralize operations and extract more value from their data.

Another noteworthy aspect of re:Invent was Amazon’s continued investment in reducing operational costs through proprietary chips like Trainium and Inferentia, a move likely to accelerate AI adoption across industries. This aligns with Amazon’s overarching goal of making enterprises “AI-ready” while lowering the barriers to entry.

The Emergence of Model Choices

During the conversation, Larry and Kit discussed an increasingly pressing question in the AI landscape—model choices. With generative AI evolving rapidly, businesses are presented with a growing range of large and small language models tailored for specific industries and use cases.

Larry highlighted an emerging trend where companies adopt domain-specific models to enhance efficiency in key areas such as automotive, manufacturing, and legal research. Instead of relying solely on massive, general-purpose AI models, businesses are turning to small, highly specialized models that have been fine-tuned to handle unique tasks, such as automating legal workflows, performing shop floor inspections, or even transforming legacy COBOL code to modern programming languages like Java.

A critical point was raised about potential issues like “model lock-in”, where organizations might feel tied to a specific model or proprietary ecosystem because of dependencies. Looking forward, Larry hypothesizes that businesses will be able to choose between open-source and proprietary models, which would ease this concern and give them more flexibility while keeping costs manageable.

The conversation also touched on an exciting frontier for AI—model supply chains. Enterprises may soon need robust strategies to source, evaluate, and adopt the right AI models for specific needs. The ability to seamlessly switch between models depending on the task at hand could become a defining feature for future-ready organizations.
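To make the idea of switching models by task concrete, here is a minimal sketch in Python. It is not something described in the interview: the `ModelRouter` class, the task names, and the model names are all hypothetical, and the "models" are stub functions standing in for real model calls. The point is only that callers ask for a task, not a specific model, so the model behind a task can be replaced without changing the calling code.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Any callable that maps a prompt to a response can act as a "model" here.
ModelFn = Callable[[str], str]


@dataclass(frozen=True)
class ModelEntry:
    name: str      # hypothetical model name
    source: str    # "open-source" or "proprietary" (illustrative labels)
    handler: ModelFn


class ModelRouter:
    """Maps task types to models so a model can be swapped without touching callers."""

    def __init__(self, fallback: ModelEntry) -> None:
        self._fallback = fallback
        self._by_task: Dict[str, ModelEntry] = {}

    def assign(self, task: str, entry: ModelEntry) -> None:
        # Re-assigning a task replaces its model; callers keep using the same task name.
        self._by_task[task] = entry

    def model_for(self, task: str) -> ModelEntry:
        # Tasks without a dedicated model fall back to the general-purpose one.
        return self._by_task.get(task, self._fallback)

    def run(self, task: str, prompt: str) -> str:
        return self.model_for(task).handler(prompt)


def make_stub(name: str) -> ModelFn:
    """Stand-in for a real model call: tags the prompt with the model name."""
    return lambda prompt: f"[{name}] {prompt}"


general = ModelEntry("general-large", "proprietary", make_stub("general-large"))
legal = ModelEntry("legal-small", "open-source", make_stub("legal-small"))

router = ModelRouter(fallback=general)
router.assign("legal-research", legal)

print(router.run("legal-research", "Summarize this contract clause."))
print(router.run("marketing-copy", "Draft a product tagline."))
```

Keeping the task-to-model mapping in one place is one way to limit lock-in: replacing a proprietary model with an open-source one becomes a single `assign` call rather than a change to every caller.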

AI on the Edge – Bringing Intelligence Closer to Action

The discussion naturally led to the growing role of edge AI in enterprise workflows. Kit explained that industries dealing with heavy data streams, such as video and image processing, are increasingly moving workloads to the edge rather than relying solely on cloud infrastructure.

For example, tasks requiring real-time processing, like store surveillance or industrial equipment monitoring, benefit greatly from edge deployments due to reduced latency and faster decision-making capabilities. According to Larry, this hybrid approach—splitting tasks between the edge and cloud—creates a highly efficient system where enterprise-grade AI can thrive.
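As a rough illustration of how work might be split between edge and cloud, the Python sketch below routes each task by its latency budget, payload size, and connectivity. The thresholds (`CLOUD_ROUND_TRIP_MS`, `MAX_UPLOAD_MB`), the `Task` fields, and the task names are assumptions made up for this example, not figures from the conversation; a real deployment would measure them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    max_latency_ms: int   # how quickly a result is needed
    payload_mb: float     # how much data would have to be uploaded


# Hypothetical figures for illustration only.
CLOUD_ROUND_TRIP_MS = 200   # assumed network round trip plus cloud inference time
MAX_UPLOAD_MB = 5.0         # assumed largest payload worth sending per request


def choose_target(task: Task, cloud_reachable: bool = True) -> str:
    """Return "edge" or "cloud" for a task under the assumptions above."""
    if not cloud_reachable:
        return "edge"    # no connectivity: the edge device must handle it
    if task.max_latency_ms < CLOUD_ROUND_TRIP_MS:
        return "edge"    # the cloud round trip alone would miss the deadline
    if task.payload_mb > MAX_UPLOAD_MB:
        return "edge"    # avoid shipping heavy video or image data upstream
    return "cloud"       # relaxed deadline and small payload: use the cloud


tasks = [
    Task("store-surveillance-frame", max_latency_ms=50, payload_mb=2.0),
    Task("equipment-video-batch", max_latency_ms=5000, payload_mb=120.0),
    Task("weekly-trend-report", max_latency_ms=60000, payload_mb=0.5),
]

for task in tasks:
    print(task.name, "->", choose_target(task))
```

Under these assumed thresholds, the real-time surveillance frame and the large video batch stay on the edge, while the small, non-urgent report goes to the cloud.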

Edge AI also lowers operational costs by optimizing hardware utilization and minimizing redundant data transfers to the cloud. For industries like retail, logistics, and manufacturing, this brings tangible benefits in terms of speed, scalability, and overall performance.

That said, deploying AI to the edge presents challenges, particularly around model training and connectivity limitations. These obstacles make it even more critical for companies to customize solutions, selecting the right model sizes and configurations to fit their unique operational environments.

The Impact of Generative AI on Industries

A standout point in Larry and Kit’s discussion was the transformative impact of AI on industry-specific solutions. For instance, in software development, generative AI tools such as Microsoft’s Copilot are enabling engineers to deliver features faster and more securely. Similarly, Salesforce is leveraging generative models to hyper-personalize customer experiences, showcasing the vast potential of AI agents across industries.

However, a recurring question in the AI community is value vs. cost. While AI tools undoubtedly introduce efficiencies, the operational costs associated with running AI systems—especially large-scale ones—can be prohibitive. Larry noted innovations such as caching and improved hardware efficiency as key areas enabling businesses to maximize ROI while minimizing costs.
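The interview does not specify which kind of caching Larry had in mind. As one simple possibility, the Python sketch below caches responses for repeated prompts so that an identical request does not trigger a second, billable model call. `CachedModel` and the stub model are hypothetical names used only for illustration.

```python
import hashlib
from typing import Callable, Dict


class CachedModel:
    """Wraps a model call and reuses responses for prompts it has already seen."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self._model = model
        self._cache: Dict[str, str] = {}
        self.calls = 0   # number of times the underlying model was actually invoked

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def generate(self, prompt: str) -> str:
        key = self._key(prompt)
        if key not in self._cache:
            self.calls += 1
            self._cache[key] = self._model(prompt)
        return self._cache[key]


def stub_model(prompt: str) -> str:
    """Stand-in for an expensive model call."""
    return f"answer to: {prompt.strip()}"


cached = CachedModel(stub_model)
cached.generate("What is our return policy?")
cached.generate("what is our   return policy?")   # served from the cache
cached.generate("How do I reset my password?")

print("prompts handled: 3, model calls made:", cached.calls)
```

Exact-match caching like this only helps when the same questions recur; whether the savings justify the added complexity depends on the workload.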

The conversation also explored broader implications of AI for the workforce. Generative AI is shifting focus away from repetitive tasks, enabling human employees to engage in creative, high-value work. However, this transformation underscores the importance of learning to work alongside AI systems. Companies must lead with training and upskilling initiatives to help their teams harness AI’s full potential responsibly and effectively.

Seizing the AI Opportunity

Larry and Kit concluded their discussion by envisioning a future where advancements in generative AI, flexible model choices, and edge deployments converge to redefine how enterprises operate. Success in this dynamic landscape will hinge on businesses making informed decisions about their AI strategies, balancing innovation with cost efficiency, and aligning technology with organizational goals.

At its core, this conversation offers a glimpse into the exciting possibilities generative AI presents while acknowledging the challenges that lie ahead. The message for enterprises is clear—those who adapt early, experiment boldly, and stay open to evolving solutions are poised to unlock new levels of growth and innovation.

If your business is eager to explore AI-driven transformation, ask yourself: do you have the right tools, models, and strategies in place to lead the charge? Check out the full interview on our YouTube channel and stay tuned for more insights from Plainsight’s Filter Lab!