Build & Run Reliable Computer Vision AI Applications

Turn video and image data into business impact faster and with greater confidence with a platform built for developers solving real-world problems across industries.

Speed Without Sacrificing Quality

Ensure trustworthy, production-ready computer vision through comprehensive, automated quality assurance built into every stage of the lifecycle.

Accelerate Time-to-Value

Go from prototype to deployment in hours with modular Filters and built-in runtimes that simplify every step of your vision pipeline.

Reduce Data Toil

Cut video wrangling by 90%. Automate formatting, annotation, and transformation so you can focus on results, not repetitive tasks.

Prototype to deployment in hours, not weeks.

Plainsight’s Platform reduces manual video wrangling by 90 percent, helping software developers massively accelerate computer vision application development and scale with confidence.

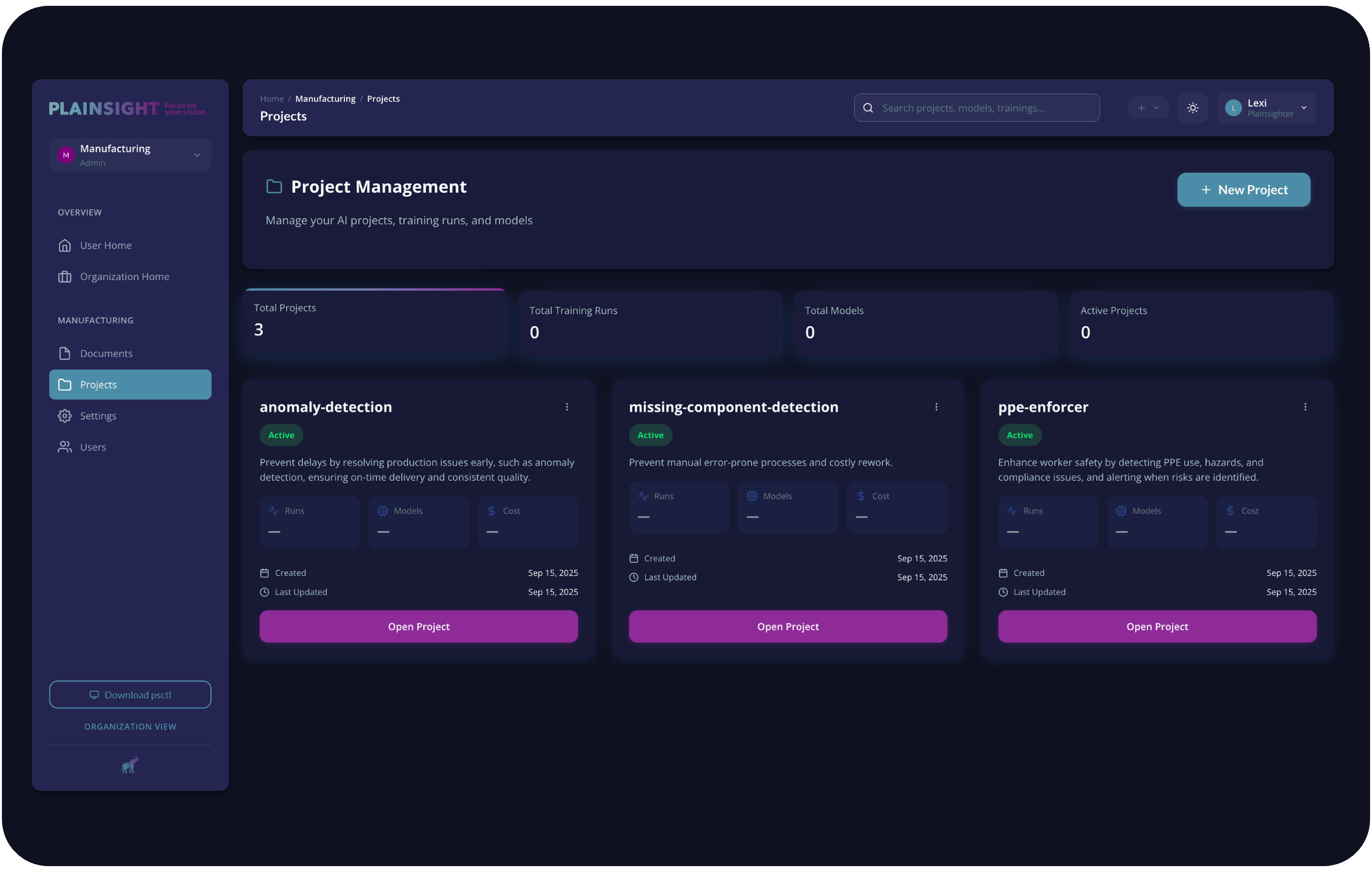

Plainsight Platform

A platform for managing vision pipelines and the entire computer vision lifecycle, from data collection and model training to deployment and monitoring.

OpenFilter Plus

Commercial support for the world's most popular open-source computer vision workload management framework.

What can Plainsight help you do?

Build with OpenFilter

- Download & share modular Filters

- Join the Discord community

- Stay up to date on new Plainsight & OpenFilter releases and news

- Access the OpenFilter Runtime

- Deploy visual pipelines, your way, in your environment

Let's Scale Up Your Vision Together.

If you’re ready to build AI-powered vision workflows, don’t wait. Whatever industry you operate in, we can help you decide how best to pursue your vision.