Businesses across industries often have more visual data on hand than they know what to do with. After all, billions of images and hundreds of thousands of hours of video are created every day. Yet despite their large and growing image and video collections, enterprises struggle to extract business value from this data.

Hidden within this visual data are insights that could transform operations and save businesses significant time and money. The challenge, however, is not a lack of understanding about what computer vision can deliver. It is the market complexity that blocks the creation of successful, production-ready vision AI solutions in the first place.

Regardless of industry or sector, any business that uses cameras today can immediately put the images and video it captures to work, uncovering opportunities for optimization and real-time analysis. All that’s needed is imagination, a clear understanding of the problems to solve, and a proven partner to guide teams through the machine learning lifecycle, whether or not those teams have technical expertise.

In this post, we’ll define vision AI, explain what it means for different businesses and stakeholders, and show how any user can get started on a computer vision project today.

What is Vision AI?

Vision AI involves training computers to replicate human vision and situational awareness using machine learning (ML) principles and techniques.

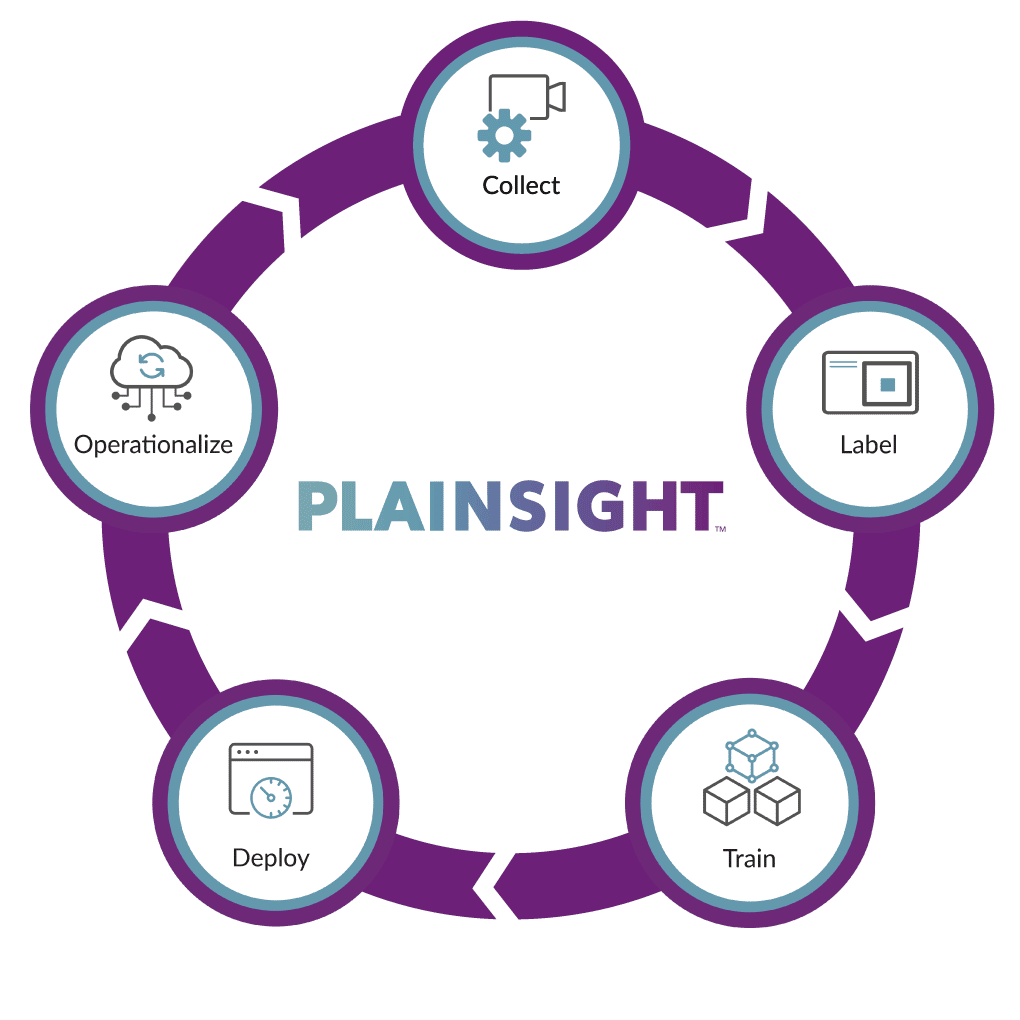

To create a vision AI application, practitioners start by labeling images, video, and other visual data with the context that machine learning models need to derive actionable insights. Once labeling is complete, models are trained on those annotations. To maintain accuracy in production over the long term, human practitioners keep applying new and more accurate labels as more visual data and context become available.
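The relabeling step is often organized as a human-in-the-loop review queue. The Python code below is a minimal sketch of one hypothetical way to do this. It does not use any specific platform's API. It assumes each prediction carries a single confidence score. Images whose score falls below a threshold are routed to human labelers, which is a simple form of uncertainty sampling. The labelers' corrections are then merged back into the training labels. The `Prediction` class, the 0.6 threshold, and the label names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Prediction:
    """A model's top prediction for one image (hypothetical record format)."""
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]


def select_for_relabeling(
    predictions: List[Prediction],
    threshold: float = 0.6,
    budget: Optional[int] = None,
) -> List[str]:
    """Return IDs of images whose prediction falls below `threshold`,
    least confident first, capped at `budget` items if one is given."""
    uncertain = [p for p in predictions if p.confidence < threshold]
    uncertain.sort(key=lambda p: p.confidence)
    if budget is not None:
        uncertain = uncertain[:budget]
    return [p.image_id for p in uncertain]


def merge_labels(labels: Dict[str, str], corrections: Dict[str, str]) -> Dict[str, str]:
    """Return a new label set in which human corrections override earlier labels."""
    merged = dict(labels)
    merged.update(corrections)
    return merged


preds = [
    Prediction("img_001", "helmet", 0.97),
    Prediction("img_002", "helmet", 0.41),
    Prediction("img_003", "no_helmet", 0.55),
    Prediction("img_004", "no_helmet", 0.88),
]
to_review = select_for_relabeling(preds)  # ["img_002", "img_003"]
labels = {p.image_id: p.label for p in preds}
labels = merge_labels(labels, {"img_002": "no_helmet"})  # a labeler's correction
```

In practice, the merged labels would feed the next training run. How often to retrain and where to set the threshold depend on the application.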

From there, teams can use a deployment platform to iterate on, monitor, optimize, and operationalize computer vision models in production.
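Part of operating a model in production is watching for degradation. The code below is a minimal sketch of one common heuristic. It assumes the model reports a confidence score for each prediction. The sketch keeps a rolling average of recent confidence and compares it with a baseline measured at deployment. A sustained drop is flagged as possible drift. The `ConfidenceMonitor` class and its parameters are hypothetical and not part of any particular platform.

```python
from collections import deque
from statistics import mean


class ConfidenceMonitor:
    """Hypothetical drift check: flags when the mean confidence of the last
    `window` predictions falls more than `tolerance` below `baseline`."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.1):
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # oldest scores drop off automatically

    def observe(self, confidence: float) -> bool:
        """Record one prediction's confidence; return True if an alert should fire."""
        self.recent.append(confidence)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait until the window is full
        return mean(self.recent) < self.baseline - self.tolerance


monitor = ConfidenceMonitor(baseline=0.9, window=3, tolerance=0.1)
for score in [0.92, 0.90, 0.88, 0.50, 0.45, 0.40]:
    if monitor.observe(score):
        print(f"possible drift after score {score}")
```

A falling confidence average is only a proxy, and it can miss errors the model makes confidently. Periodic spot checks against fresh human labels therefore remain useful.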

Vision AI as we know it today evolved from the field of computer vision. Its roots trace back to 1959, when neurophysiologists David Hubel and Torsten Wiesel published “Receptive fields of single neurones in the cat’s striate cortex.” Through a series of happy accidents, Hubel and Wiesel determined that image processing in a cat’s brain begins with simple shapes and structures, such as oriented edges and straight lines. The cat’s brain served here as a proxy for both human and computer vision.

The principles established in this study would later help shape deep learning. Hubel and Wiesel’s report also coincided with the development of the first image-scanning technologies and the dawn of 3D computing. Together, these developments helped establish AI as its own field of academic study.

Early researchers in the 1960s anticipated that it would take no more than 25 years to develop computers as intelligent as humans, yet scientists are still working toward that goal roughly 60 years later. Neuroscientist David Marr’s work, published in 1982, described algorithms for machines to detect basic shapes. But it wasn’t until 2001 that the first real-time facial recognition applications became available.

For much of the past half century, computer vision was viewed as a deeply technical discipline. Today, platforms and professional services put vision AI within reach of businesses and teams at any level of technical expertise.

With the barrier to adopting computer vision lower today than ever before, practitioners across industries can start deploying vision AI to optimize processes in myriad settings.

Why is Vision AI important?

By harnessing visual data, businesses gain a better understanding of operations and processes that may be difficult to monitor and track accurately first-hand. In a factory, for instance, cameras installed for general security can also supply visual inputs that improve product quality, worker health and safety, or even productivity.

By training models to recognize signs of hazards such as gas leaks, foreign objects, or contaminants, remote teams can set up automated alerts. These alerts let stakeholders react in near real time and develop proactive remediation processes.
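Alerting logic of this kind usually sits on top of a model’s per-frame detections. The code below is a minimal sketch that assumes a detector returning a label and a confidence score for each object in a frame. Several details are hypothetical choices, not a prescribed method. These include the hazard class names, the 0.8 confidence floor, and the rule that a hazard must persist for three consecutive frames. That persistence rule is meant to suppress one-frame false positives.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical class names a hazard-detection model might be trained to output.
HAZARD_LABELS = {"gas_leak", "foreign_object", "contaminant"}


@dataclass
class Detection:
    label: str
    confidence: float  # detector score in [0, 1]


def hazard_alerts(
    frames: Iterable[List[Detection]],
    min_confidence: float = 0.8,
    min_consecutive: int = 3,
) -> Iterator[Tuple[int, str]]:
    """Yield (frame_index, label) once a hazard has been detected with at least
    `min_confidence` in `min_consecutive` consecutive frames. The streak resets
    when the hazard is absent, so a new alert fires if it later reappears."""
    streaks: Dict[str, int] = {label: 0 for label in HAZARD_LABELS}
    for frame_index, detections in enumerate(frames):
        seen = {
            d.label
            for d in detections
            if d.label in HAZARD_LABELS and d.confidence >= min_confidence
        }
        for label in HAZARD_LABELS:
            streaks[label] = streaks[label] + 1 if label in seen else 0
            if streaks[label] == min_consecutive:
                yield frame_index, label


frames = [
    [Detection("person", 0.99)],
    [Detection("gas_leak", 0.91)],
    [Detection("gas_leak", 0.87)],
    [Detection("gas_leak", 0.93), Detection("person", 0.97)],
    [Detection("gas_leak", 0.95)],
]
for frame_index, label in hazard_alerts(frames):
    print(f"ALERT: {label} persisted through frame {frame_index}")
```

In a real deployment, the `print` call would be replaced by whatever notification channel the team already uses.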

Even in “front-of-house” hospitality and retail settings, teams can use cameras on the floor to better understand customer flow. That insight helps them optimize service, sales, and venue and facility layouts. Where the situation calls for it, users can also deploy more active, task-specific vision AI solutions. These involve stationing cameras in strategic locations solely to feed continuous data into a computer vision workflow.
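One simple way to turn floor-camera detections into customer-flow data is to count people in each zone of the camera frame. The code below is a minimal sketch with a few assumptions. It assumes a person detector that returns bounding boxes as (x, y, width, height) in pixels. It also assumes rectangular zones drawn by hand. The zone names and coordinates are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

Box = Tuple[float, float, float, float]   # (x, y, width, height) in pixels
Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def zone_of(box: Box, zones: Dict[str, Rect]) -> Optional[str]:
    """Return the name of the first zone containing the box's center, if any."""
    x, y, w, h = box
    cx, cy = x + w / 2, y + h / 2
    for name, (x_min, y_min, x_max, y_max) in zones.items():
        if x_min <= cx < x_max and y_min <= cy < y_max:
            return name
    return None


def occupancy(person_boxes: Iterable[Box], zones: Dict[str, Rect]) -> Counter:
    """Count detected people per zone for a single frame."""
    counts: Counter = Counter()
    for box in person_boxes:
        zone = zone_of(box, zones)
        if zone is not None:
            counts[zone] += 1
    return counts


zones = {"entrance": (0, 0, 200, 480), "checkout": (440, 0, 640, 480)}
boxes = [(50, 100, 40, 120), (100, 200, 50, 100), (500, 150, 60, 150), (300, 100, 40, 100)]
print(occupancy(boxes, zones))  # the last box falls outside both zones
```

Flow patterns would only emerge from aggregating these per-frame counts over time, for example per hour. Counting unique visitors would also require tracking people across frames, which this sketch does not do.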

Tools that make these applications easier to deploy, while helping teams operationalize and optimize their models in production, blow the range of possible use cases wide open. All someone needs is access to visual data to start making their computer vision dreams a reality.